Mathematics, 19.03.2020 03:03 Brittpaulina

In these cases, we might try to correct for noise while training the classifier. Consider the following formulation for training a logistic regression classifier \(w \in \mathbb{R}^d\) on a noisy training data set \((x^{(1)}, y^{(1)}), \ldots, (x^{(n)}, y^{(n)})\), where for each \(i\), \(y^{(i)} \in \{-1, +1\}\). For simplicity, we ignore the bias term \(b\). Suppose we know that the noise magnitude is at most \(r\). Then, instead of the standard logistic regression loss, we might want to minimize the following loss: \(\tilde{L}(w) = \sum_{i=1}^{n} \tilde{L}_i(w)\), where \(\tilde{L}_i(w) = \max_{z^{(i)} : \|z^{(i)} - x^{(i)}\| \le r} \log(1 + \exp(-y^{(i)} w^\top z^{(i)}))\), and \(\|v\|\) means the L2-norm of vector \(v\). (a) (5 points) Prove that for all \(i\), \(\tilde{L}_i(w) = M_i(w)\), where \(M_i(w) = \log(1 + \exp(r\|w\| - y^{(i)} w^\top x^{(i)}))\). For full credit, show all the steps in your proof.
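One possible way to argue part (a) is sketched below; it is an outline under stated assumptions, not an official solution. The perturbation \(\delta\) and the maximizer \(\delta^\star\) are symbols introduced here for illustration and do not appear in the question. The equality step assumes \(w \neq 0\); when \(w = 0\), both \(\tilde{L}_i(w)\) and \(M_i(w)\) equal \(\log 2\), so the claim holds trivially.

```latex
\begin{align*}
\tilde{L}_i(w)
  &= \max_{\|\delta\| \le r}
     \log\Bigl(1 + \exp\bigl(-y^{(i)} w^\top (x^{(i)} + \delta)\bigr)\Bigr)
  && \text{substitute } z^{(i)} = x^{(i)} + \delta \\
  &= \log\Bigl(1 + \exp\bigl(-y^{(i)} w^\top x^{(i)}
     + \max_{\|\delta\| \le r} \bigl(-y^{(i)} w^\top \delta\bigr)\bigr)\Bigr)
  && t \mapsto \log(1 + e^{t}) \text{ is increasing} \\[4pt]
-y^{(i)} w^\top \delta
  &\le |y^{(i)}| \, \|w\| \, \|\delta\| \le r \|w\|
  && \text{Cauchy--Schwarz, } |y^{(i)}| = 1,\ \|\delta\| \le r \\[4pt]
\delta^\star
  &= -\, r \, y^{(i)} \frac{w}{\|w\|}
  \;\Longrightarrow\;
  \|\delta^\star\| = r, \quad
  -y^{(i)} w^\top \delta^\star
  = r \, \bigl(y^{(i)}\bigr)^2 \frac{\|w\|^2}{\|w\|} = r \|w\|
  && \text{the bound is attained} \\[4pt]
\tilde{L}_i(w)
  &= \log\Bigl(1 + \exp\bigl(r \|w\| - y^{(i)} w^\top x^{(i)}\bigr)\Bigr)
   = M_i(w).
\end{align*}
```

The key observation is that the inner maximization is a linear objective over an L2 ball, so the worst-case perturbation points along \(-y^{(i)} w\) and contributes exactly \(r\|w\|\) to the exponent.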

Answers: 2

Another question on Mathematics

Mathematics, 21.06.2019 17:00

Tom had a total of $220 and he spent $35 on a basketball ticket. What percent of his money did he have left?

Answers: 1

Mathematics, 21.06.2019 21:00

The pH level of a blueberry is 3.1. What is the hydrogen-ion concentration [H+] for the blueberry?

Answers: 2

Mathematics, 22.06.2019 00:00

Karl drove 617.3 miles, and the car can travel 41 miles on each gallon of gas. Select a reasonable estimate of the number of gallons of gas Karl used.

Answers: 1

Mathematics, 22.06.2019 01:40

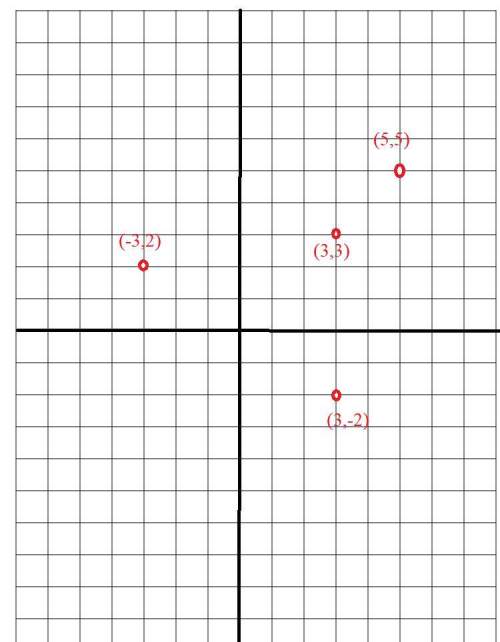

Given: PRST is a square, PMKD is a square, PR = a, PD = a. Find the area of PMCT.

Answers: 3
