Physics, 02.12.2020 16:20 cocodemain

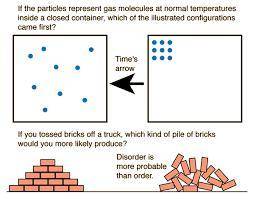

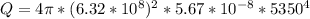

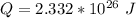

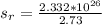

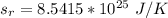

Consider a star that is a sphere with a radius of $6.32 \times 10^{8}$ m and an average surface temperature of 5350 K. Determine the amount by which the star's thermal radiation increases the entropy of the entire universe each second. Assume that the star is a perfect blackbody, and that the average temperature of the rest of the universe is 2.73 K. Do not consider the thermal radiation absorbed by the star from the rest of the universe. Give the answer in J/K.

Answers: 1


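The entropy change of the entire universe is the sum of the entropy change of the star and that of the rest of the universe:

$$\Delta S = \Delta S_s + \Delta S_r$$

$\Delta S_s$ is the entropy change of the star, which loses heat $Q$ at its surface temperature $T_s$, and is mathematically represented as

$$\Delta S_s = -\frac{Q}{T_s}$$

$\Delta S_r$ is the entropy change of the rest of the universe, which absorbs the same heat at temperature $T_r$, and is mathematically represented as

$$\Delta S_r = \frac{Q}{T_r}$$

The heat radiated in a time $t$ is $Q = Pt$, where the power of a perfect blackbody sphere of radius $R$ is

$$P = \sigma \left(4\pi R^{2}\right) T_s^{4}$$

and $\sigma$ is the Stefan-Boltzmann constant with value $5.67 \times 10^{-8}\ \mathrm{W\,m^{-2}\,K^{-4}}$.

Combining these, the entropy increase of the universe in a time $t$ is

$$\Delta S = \sigma \left(4\pi R^{2}\right) T_s^{4}\, t \left(\frac{1}{T_r} - \frac{1}{T_s}\right)$$

with $R = 6.32 \times 10^{8}$ m, $T_s = 5350$ K, $T_r = 2.73$ K and $t = 1$ s.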
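As a numerical check, here is a minimal Python sketch of the calculation. It assumes the star radiates as a perfect blackbody with power σ·4πR²·T⁴, that this heat leaves the star at its surface temperature, and that it is absorbed by the rest of the universe at 2.73 K. The function name `entropy_rate` and the rounded constant `SIGMA` are illustrative choices, not part of the original answer.

```python
import math

# Stefan-Boltzmann constant in W m^-2 K^-4 (rounded value, an assumption of this sketch)
SIGMA = 5.670e-8


def entropy_rate(radius_m, t_star_k, t_rest_k, sigma=SIGMA):
    """Entropy increase of the universe per second, in J/K, for a blackbody star."""
    # Surface area of the spherical star
    area = 4.0 * math.pi * radius_m ** 2
    # Radiated power from the Stefan-Boltzmann law, i.e. heat emitted per second
    power = sigma * area * t_star_k ** 4
    # The star loses entropy power/T_star; the rest of the universe gains power/T_rest
    return power * (1.0 / t_rest_k - 1.0 / t_star_k)


rate = entropy_rate(6.32e8, 5350.0, 2.73)
print(f"{rate:.3e} J/K per second")
```

With the values from the problem statement, this comes out to roughly 8.5 × 10^25 J/K each second. The term 1/T_star contributes well under 0.1% of the total, because the star is so much hotter than the rest of the universe.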